[AINews] Kolmogorov-Arnold Networks: MLP killers or just spicy MLPs?

Chapters

AI Twitter Recap and AI Reddit Recap

AI Discord Recap

CUDA MODE Discord

AI Community Discords

Unsloth AI and LlaMA Model Development

LM Studio Hardware Discussion

GPU Power Consumption and Model Optimization Discussions

Fine-Tuning Language Models and Image Models

CUDA Transformations Discussion

Vector Visualization and GPT Performance

Mojo Projects and Updates

Identifying HuggingFace Dataset Types and Challenges in Fine-tuning

AI Community Updates on Various Toolkits and Projects

Challenges and Innovations in Llama Farm and AI-Town Development

AI Twitter Recap and AI Reddit Recap

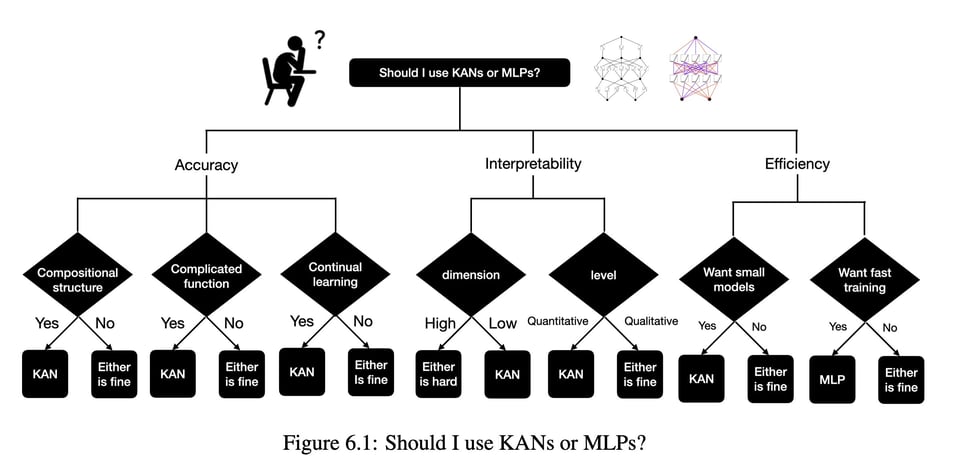

The AI Twitter Recap covers OpenAI updates on GPT models, safety testing, and AI-generated images, as well as Microsoft's AI developments and releases such as Copilot Workspace. The AI Reddit Recap highlights Google's medical AI outperforming GPT and doctors, Microsoft's development of a large language model, and an AI system claiming to eliminate 'hallucinations'. Both recaps also discuss AI ethics, societal impact, technical developments such as the Kolmogorov-Arnold Network and DeepSeek-V2, and advancements in Stable Diffusion and robotics.

AI Discord Recap

Summary:

- Engineers and developers discussed model performance optimization, fine-tuning challenges, and open-source AI developments. Examples include quantization techniques for running Large Language Models on individual GPUs, prompt engineering strategies to influence model performance, and a collaboration on a machine learning paper predicting IPO success. The discussions also covered hardware considerations for efficient AI workloads, such as GPU power consumption and PCI-E bandwidth requirements. Advancements in open-source AI projects like DeepSeek-V2 and collaborations like OpenDevin were highlighted as well. Discord communities such as Unsloth AI, Nous Research AI, and Stability.ai shared insights and challenges from AI development.
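To make the quantization idea above concrete, here is a minimal sketch of symmetric (absmax) quantization, one common way to shrink weights so a model fits on a single GPU. The function names and sample weights are illustrative assumptions; the recap does not say which quantization schemes members actually used.

```python
def quantize_absmax(weights, bits=8):
    """Map floats to signed integers using a single scale (absmax scheme)."""
    qmax = 2 ** (bits - 1) - 1              # 127 for 8-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the integer codes."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.12, -0.5, 0.33, 1.27, -1.0]
q, scale = quantize_absmax(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

The rounding error per weight is bounded by half the scale, which is why fewer bits (a larger scale) trade memory for accuracy.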

CUDA MODE Discord

- GPU Clock Speed Mix-Up: Discussions began with confusion over the clock speed of H100 GPUs, corrected from 1.8 MHz to 1.8 GHz, emphasizing clarity in technical specifications.

- Tuning CUDA: From Kernels to Libraries: Members shared insights on optimizing CUDA operations, highlighting Triton's efficiency in kernel design and the advantage of fused operations.

- PyTorch Dynamics: Various issues and improvements in PyTorch were discussed, including troubleshooting dynamic shapes and working with PyTorch Compile.

- Transformer Performance Innovations: Conversations focused on boosting efficiency with Dynamic Memory Compression and discussions on quantization methods.

- CUDA Discussions Heat Up in llm.c: The channel addressed multi-GPU training hangs and optimization opportunities using NVIDIA Nsight™ Systems, alongside the release of the FineWeb dataset for LLM performance and potential kernel optimizations.
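To see why the MHz/GHz correction matters, the cycle time at each frequency can be worked out directly. The sketch below is plain arithmetic; the helper name `cycle_time_ns` is illustrative, and nothing here is specific to the H100 beyond the 1.8 figure quoted above.

```python
def cycle_time_ns(freq_hz):
    """Duration of one clock cycle in nanoseconds."""
    return 1e9 / freq_hz


mhz_cycle = cycle_time_ns(1.8e6)   # the mistaken 1.8 MHz figure
ghz_cycle = cycle_time_ns(1.8e9)   # the corrected 1.8 GHz figure

print(f"1.8 MHz: {mhz_cycle:.1f} ns per cycle")
print(f"1.8 GHz: {ghz_cycle:.3f} ns per cycle")
print(f"ratio: {mhz_cycle / ghz_cycle:.0f}x")
```

A unit slip of this kind changes any cycle-based estimate by three orders of magnitude.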

AI Community Discords

This section provides updates and discussions from AI community Discords such as LlamaIndex, tinygrad (George Hotz), Cohere, Latent Space, AI Stack Devs, Mozilla AI, Interconnects, DiscoResearch, LLM Perf Enthusiasts, and Alignment Lab AI. The content includes webinars featuring OpenDevin's authors, upgrades to Hugging Face's TGI toolkit, challenges integrating LlamaIndex with Supabase Vectorstore, discussions on building AI agents, and advancements in models like DeepSeek-V2. Issues related to model optimization, benchmarking, tool functionality, and community collaboration are also highlighted across the different Discord channels.

Unsloth AI and LlaMA Model Development

A member reported success with LoRA by training with load_in_4bit = False, saving the LoRA adapters separately, and converting them with a specific llama.cpp script, which they said gave perfect results. Members asked about deployment using local data for fine-tuning and about using multiple GPUs for training with Unsloth; the current conclusion is that Unsloth does not yet support multi-GPU training, though it may in the future. New LlaMA models have been developed to aid knowledge graph construction and to work with structured data such as RDF triples. A new Instruct Coder Model has been released with improvements over its predecessor, and Oncord, a professional website builder, was presented. There is also an open call for collaboration on a machine learning paper predicting IPO success, along with discussions of startup marketing strategies. Members are also interested in enhancing multimodal language models and pushing for higher resolution in image interpretation.
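For readers unfamiliar with why LoRA adapters can be saved separately from the base model, the sketch below shows the underlying arithmetic: the adapter is a pair of small matrices whose scaled product is added to a frozen base weight. This is a generic illustration in plain Python with made-up values; it is not Unsloth's or llama.cpp's code, and the names `merge_lora`, `W`, `A`, `B`, and `alpha` are assumptions for the example.

```python
def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def merge_lora(W, A, B, alpha):
    """Return W + (alpha / r) * B @ A, where r is the adapter rank."""
    r = len(A)                      # A has shape (r, in_features)
    scale = alpha / r
    delta = matmul(B, A)            # shape (out_features, in_features)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]


# Hypothetical 2x3 base weight and a rank-1 adapter.
W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0]]
A = [[1.0, 2.0, 3.0]]               # (r=1, in=3)
B = [[0.5], [-0.5]]                 # (out=2, r=1)

merged = merge_lora(W, A, B, alpha=2.0)
print(merged)
```

Because only `A` and `B` are trained, they can be stored on their own and merged or converted later, which is the workflow the member described.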

LM Studio Hardware Discussion

- Server Logging Off Option Request: A user desires the ability to turn off server logging via the LM Studio GUI for increased privacy during app development.

- Recognition of Prompt Engineering Value: Prompt engineering is acknowledged as a valuable skill in tech, crucial for maximizing LLM outputs.

- Headless Mode Operation for LM Studio: Members discussed running LM Studio in headless mode via the command line for server mode operations.

- Phi-3 vs. Llama 3 for Quality Outputs: Debate on the effectiveness of Phi-3 model vs. Llama 3 for tasks like content summarization and FAQ generation.

- Model Crashes Troubleshooting: Users report issues with model performance, suggesting checks on drivers, config adjustments, and system evaluations for solutions.

- Linux Memory Misreporting: A user reports RAM misreporting in LM Studio on Ubuntu, seeking guidance on version compatibility and library dependency concerns.

GPU Power Consumption and Model Optimization Discussions

GPU Power Consumption Discussions:

- A user observed their P40 GPUs idling at 10 watts but never dropping below 50 watts after use, with a total draw of 200 watts from the GPUs. They shared server setup details involving power supplies and noise mitigation.

Planning GPU Power Budget for Inference:

- Another user discussed limiting their GPU to 140 watts for 85% performance on models like 7b Mistral and inquired about LM Studio's utilization of multiple GPUs.
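The trade-off in that power-limit report can be expressed as performance per watt. The sketch below assumes a stock power draw of 250 W purely as a placeholder, since the recap does not state the card or its default limit; only the 140 W and 85% figures come from the discussion.

```python
def perf_per_watt_gain(stock_watts, limited_watts, relative_perf):
    """Efficiency of the limited configuration relative to stock."""
    power_fraction = limited_watts / stock_watts
    return relative_perf / power_fraction


# stock_watts=250.0 is a hypothetical value, not taken from the discussion.
gain = perf_per_watt_gain(stock_watts=250.0, limited_watts=140.0,
                          relative_perf=0.85)
print(f"perf per watt vs. stock: {gain:.2f}x")
```

Under that assumed stock limit, the card would deliver roughly half again as much work per watt, which is why power capping is attractive for inference servers.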

Assessing Gaming Mobo for P40s Without Additional GPUs:

- A user pondered using gaming motherboards for P40s due to PCIe bandwidth differences.

Debunking PCI-E Bandwidth Myths for Inference:

- A user shared Reddit and GitHub links suggesting that PCI-E bandwidth requirements for inference tasks are often overestimated.

Considering Hardware Configs for Efficient LLM Inferencing:

- Users exchanged ideas about efficient server builds, power consumption, and balance between robust hardware and practicality.

Fine-Tuning Language Models and Image Models

This section discusses how Large Language Models (LLMs) can use special tokens for smarter retrieval, improving their performance. It also covers fine-tuning LLMs to decide when to retrieve extra context, leading to more accurate Retrieval-Augmented Generation (RAG) systems. Discussions on image models included experimenting with Darknet YOLOv4, searching for datasets like UA-DETRAC, using ConvNeXt for more efficient training, training multi-label image classifiers, and choosing models for face recognition and keypoint detection. The HuggingFace exchange tackled adding new models to Transformers and debugging model behavior across different cloud clusters. The section also explores personalization of image generation models with Custom Diffusion and its impact on memory optimization, as well as community questions about AI model training, fine-tuning, and cost estimation based on token counts.
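Cost estimation from token counts usually comes down to one multiplication. The sketch below is a back-of-the-envelope helper; the price, the epoch count, and the four-characters-per-token heuristic are all assumptions for illustration, and real numbers depend on the provider and the tokenizer.

```python
def rough_token_count(text, chars_per_token=4.0):
    """Crude token estimate; a real tokenizer should be used when available."""
    return max(1, round(len(text) / chars_per_token))


def estimate_cost_usd(num_tokens, price_per_million_tokens, epochs=1):
    """Tokens processed across all epochs times the per-token price."""
    return num_tokens * epochs * price_per_million_tokens / 1_000_000


# Hypothetical dataset size and price, not quoted from any provider.
dataset_tokens = 2_000_000
cost = estimate_cost_usd(dataset_tokens, price_per_million_tokens=8.0, epochs=3)
print(f"estimated fine-tuning cost: ${cost:.2f}")
```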

CUDA Transformations Discussion

Users in the Discord channel discussed a range of CUDA-related topics. These included troubleshooting dynamic shapes in PyTorch compilation, with related issues posted on PyTorch's GitHub, and boosting transformer efficiency through Dynamic Memory Compression (DMC). Members also covered a beginner's confusion over clock speeds, using torch.compile with the Triton backend, and model optimization with BetterTransformer in the Hugging Face ecosystem. Other threads touched on tiling in matrix transpose operations, multi-chip model training efficiency (illustrated with a visual example of layerwise matrix multiplication), and confusion over optimizations in the book exercises. Further topics included GPU memory allocation in Metal, feedback on Lightning AI Studio, a proposal for a Triton language presentation, and access to self-hosted GitHub runners for GPU testing. Members also shared GitHub pull requests for PyTorch.org that add new accelerator options to a dropdown, along with a preview of PyTorch.org highlighting TorchScript, TorchServe, and the PyTorch 2.3 updates.
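As a rough illustration of tiling in a matrix transpose, the sketch below walks the matrix in small square blocks rather than row by row. It is written in plain Python to show the loop structure only; in a CUDA kernel each tile would typically be staged through shared memory so that both the reads and the writes to global memory are coalesced. The function name and tile size are illustrative choices.

```python
def transpose_tiled(matrix, tile=2):
    """Transpose a rectangular matrix by visiting it one tile at a time."""
    rows, cols = len(matrix), len(matrix[0])
    out = [[None] * rows for _ in range(cols)]
    for r0 in range(0, rows, tile):            # top-left corner of each tile
        for c0 in range(0, cols, tile):
            for r in range(r0, min(r0 + tile, rows)):
                for c in range(c0, min(c0 + tile, cols)):
                    out[c][r] = matrix[r][c]
    return out


m = [[1, 2, 3],
     [4, 5, 6]]
t = transpose_tiled(m, tile=2)
print(t)
```

The `min(...)` bounds handle matrices whose dimensions are not multiples of the tile size, which is the same edge case a GPU kernel has to guard against.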

Vector Visualization and GPT Performance

The discussion of vector visualization covered the challenges of visualizing vectors in GPT's 256 dimensions. Members noted that GPT-4's performance remains consistent regardless of demand, with everyone receiving the same 'turbo model.' The idea that an inferior model is swapped in to manage demand was debunked, with members emphasizing that investing in servers is the cost-effective option. Other threads covered struggles integrating Twitter data with LLMs, GPT models struggling to give tailored responses, prompt engineering best practices including prompt expansion for product identification, DALL-E's difficulty with negative prompts and prompt specificity, suppressing unwanted tokens via logit bias, and step-wise improvement of API prompt responses.
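The logit-bias technique mentioned above works by adding a fixed offset to selected tokens' logits before sampling. The sketch below shows the effect on a toy distribution; the vocabulary and logit values are invented, and string keys are used for readability where the OpenAI API's `logit_bias` parameter expects token IDs with values between -100 and 100.

```python
import math


def softmax(logits):
    """Convert a dict of logits into a dict of probabilities."""
    peak = max(logits.values())
    exps = {tok: math.exp(v - peak) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def apply_logit_bias(logits, bias):
    """Add a per-token offset; tokens without a bias are left unchanged."""
    return {tok: v + bias.get(tok, 0.0) for tok, v in logits.items()}


# Toy logits, chosen only for illustration.
logits = {"yes": 2.0, "no": 1.5, "maybe": 1.0}
before = softmax(logits)
after = softmax(apply_logit_bias(logits, {"maybe": -100.0}))
print(before["maybe"], after["maybe"])
```

A bias of -100 effectively bans the token, while the remaining tokens keep their relative ordering.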

Mojo Projects and Updates

- Mojo-sort Updated and Enhanced: The mojo-sort project has been updated to work with the latest Mojo nightly. It now includes a more efficient radix sort algorithm for strings, boasting faster speeds across all benchmarks.

- Help Needed with Lightbug Migration: The Lightbug project is facing issues migrating to Mojo version 24.3, particularly concerning errors that appear to log EC2 locations. The developers are requesting assistance, with details documented in a GitHub issue.

- Basalt Navigates Mojo's Limitations: The Basalt project adapts to Mojo's current limitations, such as the lack of classes and inheritance, with workarounds like using StaticTuple for compile-time lists. These limitations have generally not blocked the project's overall goals.

- A New Port of Minbpe to Mojo: Minbpe.mojo, a Mojo port of Andrej Karpathy's Python project, has been released. Although currently slower than its Rust counterpart, it runs three times faster than the original Python version, and there is potential for optimization, including possible future SIMD implementations.

- Mojo GUI Library Inquiry: A member asked whether a Mojo GUI library exists; the question had received no response in the messages covered.
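Minbpe implements byte-pair encoding (BPE) tokenization. As a rough illustration of the core step that a port such as Minbpe.mojo has to reproduce, the Python sketch below counts adjacent pairs and merges the most frequent one into a new token ID. The helper names and sample string are illustrative choices, not code taken from Minbpe.mojo.

```python
def get_stats(ids):
    """Count how often each adjacent pair of token IDs occurs."""
    counts = {}
    for pair in zip(ids, ids[1:]):
        counts[pair] = counts.get(pair, 0) + 1
    return counts


def merge(ids, pair, new_id):
    """Replace every non-overlapping occurrence of pair with new_id."""
    out, i = [], 0
    while i < len(ids):
        if i < len(ids) - 1 and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


ids = list("aaabdaaabac".encode("utf-8"))   # start from raw bytes
stats = get_stats(ids)
top_pair = max(stats, key=stats.get)        # most frequent adjacent pair
merged_ids = merge(ids, top_pair, 256)      # 256 is the first ID past the byte range
print(top_pair, merged_ids)
```

Training repeats this step until the vocabulary reaches its target size. The pair-counting loop dominates the runtime, which is one reason compiled ports can outpace the Python original.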

Identifying HuggingFace Dataset Types and Challenges in Fine-tuning

- When uncertain about the type of a HuggingFace dataset, the simplest method is to download and open it up to inspect the contents. Alternatively, one can check the dataset's preview for this information.

- A language-specific LLM for Java code assistance is being considered, inspired by IBM's granite models. The interest lies in creating a model that can operate on a standard laptop without a GPU. The member seeks guidance on selecting a base model for fine-tuning, determining the right number of epochs, training size, and quantization to maintain accuracy.

- Members discussed challenges fine-tuning on datasets like orca-math-word-problems-200k, math instruct, and meta-mathQA from Hugging Face, where a decrease in scores on mathematical evaluations, including mmlu and Chinese math evaluations, was observed. Members also discussed the impact of quantization on model performance.

- Fine-tuning and evaluating strategies were outlined, with concerns raised about correct prompt template usage during evaluation and fine-tuning. The influence of prompt design on model behavior was highlighted, emphasizing the need for accurate comparisons and awareness of potential performance issues. Additionally, the utilization of custom prompt formats like alpaca was mentioned for models being fine-tuned with those examples.
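Since template mismatches between fine-tuning and evaluation came up, the sketch below shows one way to keep a single prompt builder for both. It uses the commonly seen Alpaca-style layout without an input field; the exact wording a given model expects is an assumption here and should be checked against its model card.

```python
# Alpaca-style template (no-input variant); verify against the model card.
ALPACA_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Response:\n"
)


def build_prompt(instruction):
    """Format one instruction with the shared template."""
    return ALPACA_TEMPLATE.format(instruction=instruction)


def build_training_example(instruction, response):
    """Training text is the same prompt followed by the target response."""
    return build_prompt(instruction) + response


prompt = build_prompt("Add 2 and 3.")
example = build_training_example("Add 2 and 3.", "5")
print(prompt)
```

Routing both training and evaluation through `build_prompt` makes it harder for the two to drift apart, which is the failure mode members were concerned about.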

AI Community Updates on Various Toolkits and Projects

The latest updates from different AI communities showcase a range of activities and discussions. From unveiling new features in Hugging Face's TGI toolkit to exploring AI orchestration practices, members engage in diverse topics. Conversations touch upon issues like integrating LlamaIndex with other databases and seeking HyDE for complex NL-SQL chatbots. Additionally, projects like Coral Chatbot in Cohere and simulation collaborations in AI Stack Devs demonstrate ongoing creativity and collaboration within the AI community.

Challenges and Innovations in Llama Farm and AI-Town Development

This section discusses challenges and innovations in the development of Llama Farm and AI-Town, along with related community threads. Highlights for Llama Farm and AI-Town include the need to wipe tables manually after code updates, a suggestion to automate detection of character-definition changes with inotifywait, an invitation to try the Llama Farm simulation, integration concepts between Llama Farm and AI-Town, and plans to generalize Llama Farm so it can hook into any system. Model and tooling topics include slower model performance on devices, Rocket-3B as a speedier alternative, efficient use of the Ollama cache with Llamafile, challenges integrating AutoGPT with Llamafile, and a draft PR for Llamafile support in AutoGPT. Other threads cover debates over AI evaluations and the importance of standard benchmarks, Mistral's capabilities, decoding techniques to lower latency, the creation of a German DPO dataset, a request for input on German pretraining datasets, resource sharing for inclusive language, and the use of the OpenAI Assistant API with 'llm', including an update on collaboration in a related GitHub issue.

FAQ

Q: What is the primary focus of the AI Twitter Recap and AI Reddit Recap sections?

A: The primary focus of the AI Twitter Recap and AI Reddit Recap sections is to cover updates on AI advancements, developments in GPT models, safety testing, AI-generated images, and discussions on AI ethics, societal impact, and technical developments within the AI community.

Q: What were some of the key topics discussed by engineers and developers in the AI community?

A: Engineers and developers discussed topics such as model performance optimization, fine-tuning challenges, open-source AI developments, techniques like quantization for Large Language Models, strategies for prompt engineering, and collaborations on machine learning papers predicting IPO success.

Q: What were some of the hardware considerations discussed for efficient AI workloads in the AI community?

A: Discussions included hardware considerations such as GPU power consumption, PCI-E bandwidth requirements, efficient server builds, and balancing robust hardware with practicality for Large Language Model inferencing.

Q: What were some challenges and discussions related to CUDA transformations and PyTorch dynamics in the AI community?

A: Challenges and discussions included troubleshooting dynamic shapes in PyTorch, boosting transformer efficiency with Dynamic Memory Compression, clock speed confusion, model optimization using BetterTransformer, and GPU memory allocation in Metal.

Q: What were some of the recent updates and discussions in the AI community regarding Vector Visualization and image models?

A: Recent updates included discussions on visualizing vectors in GPT models, utilizing special tokens for smarter retrievals in Large Language Models, fine-tuning LLMs for more accurate RAG systems, experimenting with image models like Darknet Yolov4, optimizing training for multi-label image classification, and debugging model behavior in cloud clusters.

Q: What are some of the recent projects and developments mentioned in the AI community, such as Mojo-sort, Lightbug migration, and Minbpe to Mojo port?

A: Recent projects and developments include updates in mojo-sort for faster speeds, challenges in Lightbug migration to Mojo version 24.3, Basalt project adaptations, Minbpe.mojo port release running faster than its Python version, and inquiries about the existence of a Mojo GUI library.

Q: What were some challenges and discussions surrounding fine-tuning models, evaluation strategies, and prompt engineering in the AI community?

A: Challenges and discussions included issues with fine-tuning models like orca-math-word-problems-200k and meta-mathQA, impact of quantization on model performance, correct prompt template usage during evaluation and fine-tuning, and the influence of prompt design on model behavior.

Q: What were some of the activities and discussions within AI communities, from Hugging Face's TGI toolkit to AI orchestration practices?

A: Activities and discussions included new features in Hugging Face's TGI toolkit, exploring AI orchestration practices, AI chatbot integrations, simulation collaborations, and ongoing creativity and collaboration within the AI community.

Q: What were some of the challenges and innovations discussed in the development of Llama Farm and AI-Town in the AI community?

A: Challenges and innovations discussed included automation suggestions for detecting character definition changes, integration concepts between Llama Farm and AI-Town, model performance improvements, use of alternative models like Rocket-3B, challenges with AutoGPT and Llamafile integration, and discussions on AI evaluations and standard benchmarks.

Q: How did the AI community address issues related to GPU Power Consumption and Planning GPU Power Budget for Inference?

A: The AI community discussed observations of GPU power consumption, planning GPU power budgets for inference tasks, assessing gaming motherboards for GPU usage, debunking PCI-E bandwidth myths, and considering hardware configurations for efficient Large Language Model inferencing.
