[AINews] Gemini 2.0 Flash GA, with new Flash Lite, 2.0 Pro, and Flash Thinking

Chapters

AI Twitter and Reddit Recaps

Open Source in AI Development

Deep Learning Community Updates

Mojo Compiler and Discord Updates

Chain of Agents Community Interest and Practical Implementation

Exploring New Features and User Feedback

ML Theory and Optimization Discussion

LM Studio Hardware Discussion

Yannic Kilcher Discussion: NURBS vs Meshes, AI Reasoning Models, Perspective and Transformation

Use Cases and Features Overview

Parallel Generation Strategies and Performance Comparisons

GPU Mode Discussions

Live Event Announcement and Community Engagement

AI Twitter and Reddit Recaps

AI Twitter Recap

-

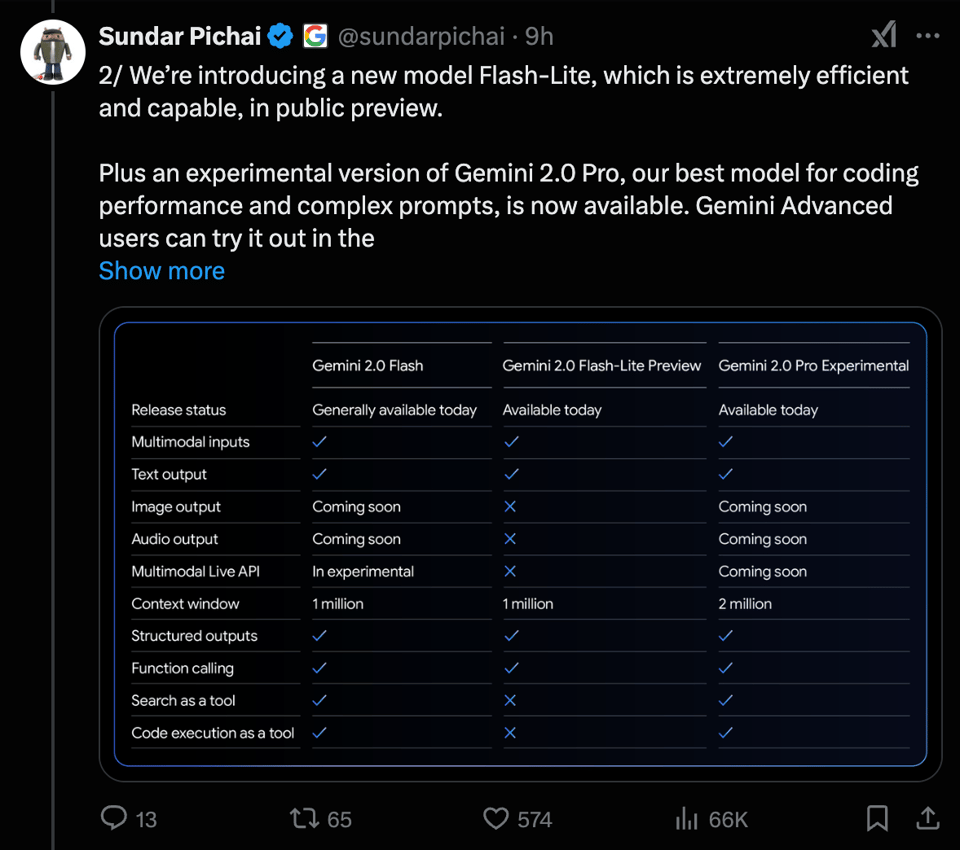

Google DeepMind Launches Gemini 2.0 Models: Google DeepMind announced the availability of Gemini 2.0 models like Flash, Flash-Lite, and Pro Experimental, offering features such as multimodal input and cost-efficiency.

- ChatGPT Deep Dive by Andrej Karpathy: Andrej Karpathy released a comprehensive YouTube video on Large Language Models, covering pretraining, fine-tuning, and reinforcement learning.

- Free Course on Transformer LLMs: Jay Alammar and Maarten Grootendorst introduced a free course that dives deep into the Transformer architecture and mixture-of-experts models.

AI Reddit Recap

/r/LocalLlama Recap

- DeepSeek VL2 Small Launch: DeepSeek introduced a powerful demo for DeepSeek VL2 Small, showcasing capabilities in OCR, text extraction, and chat use-cases.

- DeepSeek R1's Benchmark Success: DeepSeek R1 has reached 1.2 million downloads since its launch on Hugging Face, with a detailed technical report available.

Open Source in AI Development

DeepSeek issued a 'Terminology Correction' regarding DeepSeek-R1, clarifying that its code and models are released under the MIT License rather than being fully open-source. Users discussed the importance of access to source code for a project to count as truly open-source. This highlights a broader issue in the AI community: many released models lack the details needed to replicate their training process.

Deep Learning Community Updates

These sections cover a range of updates and issues across the deep learning community. From Windsurf's new features to discussions of Codeium plugin struggles and Cursor's advantages, users shared their experiences and concerns. In the Stability.ai Discord, new leadership aims to improve community engagement, while users explore nested AI architectures and the challenges of training models. The Cursor IDE channel highlighted new server integration features and debated the coding capabilities of Gemini 2.0. Perplexity AI users expressed frustration over UI changes and model access limitations, prompting discussions of performance and accessibility. The sections also cover futuristic AI models, voice dictation tools, and potential mobile app development for coding. Across platforms like LM Studio and HuggingFace, users shared struggles with GPU limitations, model recommendations, and research insights. The discussions also touched on NURBS, advances in AI reasoning models, and debates on AI ethics and the contributions of infrastructure teams.

Mojo Compiler and Discord Updates

The Mojo compiler is currently closed source, and compiler enthusiasts are eager for access to its MLIR internals. It is expected to become available by the end of 2026, with Modular aiming to open-source it by Q4 2025. Members debated whether Mojo's standard library should evolve into a general-purpose library. Async functions in Mojo prompted discussion of new syntax proposals and the potential difficulty of maintaining separate async and sync libraries.

Updates from other Discords:

- NotebookLM Discord: AI automates the drafting of legal documents, members experiment with avatars in contract review, NotebookLM Plus activation issues arise, and users express concerns about spreadsheet integration.

- Torchtune Discord: Torchtune's memory management, a Ladder-residual modification that accelerates the Llama model, the Kolo Docker tool's official support for Torchtune, Tune Lab UI development for Torchtune, and success with a GRPO implementation.

- Latent Space Discord: OpenAI's SWE Agent, the OmniHuman video generation project, Figure AI's departure from OpenAI, Gemini 2.0 Flash's general availability, and Mistral AI's platform rebranding.

- Nomic.ai Discord: GPT4All v3.9.0 with new features, a new ReAG approach for creating context-aware responses, discussions on self-hosting GPT4All, local models for NSFW content, and reported UI bugs.

- MCP Discord: interest in ChatGPT Pro, Excel MCP development discussions, experiences with Playwright and Puppeteer, Home Assistant's support for MCP client/server, and PulseMCP showcasing MCP use cases.

- GPU MODE Discord: Flux's image generation efficiency, OmniHuman's realistic human video generation, FlowLLM for material discovery, Modal's hiring of ML performance engineers, and Torchao compatibility issues.

- LlamaIndex Discord: a DeepSeek forum, a RAG app tutorial, Gemini 2.0 support, an observation about the LlamaIndex LLM class, and troubleshooting with Qwen-2.5.

- Cohere Discord: users pondering an Embed v3 migration, a desire for a Cohere moderation model, inquiries about chat feature pricing, struggles with conversational memory, and community reminders about the code of conduct.

- Tinygrad (George Hotz) Discord: version 0.10.1 errors, compiler flag concerns, debugging improvements, and inquiries about base operations and kernel implementations.

- Gorilla LLM Discord: API endpoint access, whether user data is sufficient for training, and applying the RAFT method with Llama 3.1 7B.

- DSPy Discord: a Chain of Agents implemented the DSPy way, and a search for a Git repository containing the discussed Chain of Agents example.

Chain of Agents Community Interest and Practical Implementation

The community has shown a strong interest in practical hands-on implementations of the Chain of Agents concept. Discussions in various Discord channels highlighted the utilization of Aider in managing errors and code refactoring, comparisons between different models like O3 Mini and Claude, integration of AI tools like DeepClaude with OpenRouter, and limitations of Large Language Models (LLMs) in complex tasks. Users also discussed utilizing Perplexity combined with R1 for research tasks and the potential synergies between the two. The section also includes links to further resources mentioned in the discussions.
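For readers who want a hands-on starting point, the Chain of Agents pattern is commonly described as a sequence of worker agents that each read one chunk of a long input and pass running notes to the next worker, followed by a manager agent that writes the final answer. The sketch below illustrates that flow in plain Python. It is not the DSPy implementation mentioned in the discussions, and the function name, the prompt wording, and the `llm` callable are all hypothetical placeholders.

```python
from typing import Callable, List


def chain_of_agents(chunks: List[str], question: str,
                    llm: Callable[[str], str]) -> str:
    """Run worker agents over the chunks in order, then a manager agent.

    `llm` is a hypothetical stand-in for any function that maps a prompt
    string to a completion string.
    """
    notes = ""  # message handed from one worker to the next
    for chunk in chunks:
        # Each worker sees the question, the notes so far, and one chunk.
        worker_prompt = (
            f"Question: {question}\n"
            f"Notes so far: {notes}\n"
            f"New text: {chunk}\n"
            "Update the notes with anything relevant to the question."
        )
        notes = llm(worker_prompt)
    # The manager never sees the raw chunks, only the final notes.
    manager_prompt = (
        f"Question: {question}\n"
        f"Notes: {notes}\n"
        "Give the final answer."
    )
    return llm(manager_prompt)
```

Because the model call is passed in as a function, the same loop can be exercised with a stub during testing and with a real client later.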

Exploring New Features and User Feedback

The section introduces 'Windsurf Next,' encouraging users to explore innovative features and improvements in AI for software development. Minimum requirements for installation are specified for different operating systems. The subsequent discussions in the Discord channel cover various topics including concerns over tool use credit consumption, skepticism about new AI tools, the introduction of the Runic framework, user issues with Codeium JetBrains plugin, and debates on Windsurf vs Codeium. Users share experiences, feedback, and suggestions for improvements, aiming to enhance overall usability and user experience.

ML Theory and Optimization Discussion

Struggles with ML Theory and Optimization:

Members discussed the challenges of convex optimization in practical scenarios due to data roughness.

- Idealized nature of convex optimization compared to real-world scenarios was likened to building a perfect bike for flat surfaces.

Introduction of Harmonic Loss as an Alternative:

A new paper introduced harmonic loss as an alternative to cross-entropy loss, with benefits like improved interpretability and faster convergence.

- Skepticism about its novelty and potential to shift optimization targets was noted.
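As a rough illustration of how such a loss differs from cross-entropy, the sketch below assumes a formulation in which each class has a weight vector acting as a class center, the logit is replaced by the Euclidean distance from the input to that center, and softmax is replaced by a normalized inverse power of those distances. The function name, the exponent `n`, and its default value are assumptions for illustration, not details taken from the discussion.

```python
import math


def harmonic_loss(x, class_centers, target, n=2.0, eps=1e-12):
    """Negative log-probability of `target` under a distance-based classifier.

    p_i is proportional to d_i ** (-n), where d_i is the Euclidean distance
    from x to class center i. `n` and `eps` are illustrative assumptions.
    """
    # Distance from the input to every class center (eps avoids log(0)).
    dists = [math.dist(x, w) + eps for w in class_centers]
    # Work in log space: log p_i = -n * log d_i - logsumexp_j(-n * log d_j).
    scores = [-n * math.log(d) for d in dists]
    top = max(scores)
    log_norm = top + math.log(sum(math.exp(s - top) for s in scores))
    return -(scores[target] - log_norm)


# Input at the origin, two class centers at distance 1 and distance 2.
print(harmonic_loss([0.0, 0.0], [[1.0, 0.0], [0.0, 2.0]], target=0))
```

One property visible in this form is that multiplying every distance by the same factor leaves the probabilities unchanged, whereas scaling logits changes the output of a softmax.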

Interest in Collaborating on ML Projects:

A member expressed interest in collaborating on research projects related to LLM agents.

- Emphasis was placed on working on novel problems and encouraging teamwork.

Thoughts on Diffusion Models:

Discussion arose on the strengths of diffusion models compared to other ML subdisciplines.

- Theoretical advancements were highlighted for image generation.

Statistical Foundations in ML:

Many expressed a desire for stronger statistical foundational knowledge in ML for better comprehension.

- A perceived gap in statistical literacy among practitioners was underscored.

LM Studio Hardware Discussion

In this section, users discuss hardware topics related to running LM Studio. Discussions include challenges faced when running LM Studio on older CPUs and GPUs, model recommendations for specific hardware configurations, enabling Vulkan support for better GPU utilization, integrating GPT-Researcher with LM Studio, and support for image and video models in LM Studio. Users also share insights on GPU performance, rising prices, memory configurations for inference, and VRAM requirements for running larger models comfortably.
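A common back-of-the-envelope check for VRAM requirements is to multiply the parameter count by the bytes stored per weight. The sketch below does only that arithmetic. It ignores the KV cache, activations, and runtime overhead, so real usage will be higher, and the 7B model size is a hypothetical example rather than a figure from the discussion.

```python
def weight_memory_gib(n_params_billion, bits_per_weight):
    """Approximate memory needed for the model weights alone, in GiB."""
    total_bytes = n_params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


# Hypothetical 7B-parameter model at three common weight precisions.
for bits in (16, 8, 4):
    print(f"7B model at {bits}-bit: ~{weight_memory_gib(7, bits):.1f} GiB")
```

Halving the bits per weight halves the estimate, which is why quantized models are the usual recommendation for cards with limited VRAM.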

Yannic Kilcher Discussion: NURBS vs Meshes, AI Reasoning Models, Perspective and Transformation

- NURBS offer advantages over traditional meshes: NURBS provide parametric and compact representations ideal for accurate simulations, while modern shaders have addressed previous texturing challenges.

- Emerging affordable AI reasoning models: The s1 reasoning model mimics OpenAI's capabilities at a reduced cost using a distillation approach from Google's Gemini 2.0.

- Importance of perspective in computational modeling: Upper-degree algebras like PGA (projective geometric algebra) and CGA (conformal geometric algebra) are crucial for handling geometric relationships and perspectives accurately.

- Challenges in 3D mesh topology: Efforts are ongoing to enhance mesh topologies for various applications, with a shift towards dynamic models like NURBS and SubDs.

- Static vs Dynamic Approaches in AI: Static designs face challenges in dynamic applications, highlighting the need for evolving methodologies for computational models and AI agents.
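To make the point about compact parametric representations concrete, the sketch below evaluates a rational quadratic Bezier curve, which is the simplest single-span case of a NURBS curve. With three control points and three weights it traces an exact quarter of the unit circle, something a polygonal mesh can only approximate. The function name is hypothetical and the example is an illustration of the general idea, not code from the discussion.

```python
import math


def rational_quadratic_point(t, control_points, weights):
    """Evaluate a rational quadratic Bezier curve at parameter t in [0, 1]."""
    # Quadratic Bernstein basis functions.
    basis = [(1 - t) ** 2, 2 * t * (1 - t), t ** 2]
    # Weighted sum of control points divided by the weighted sum of the basis.
    denom = sum(b * w for b, w in zip(basis, weights))
    x = sum(b * w * p[0] for b, w, p in zip(basis, weights, control_points)) / denom
    y = sum(b * w * p[1] for b, w, p in zip(basis, weights, control_points)) / denom
    return x, y


# Quarter of the unit circle: three control points and three weights.
points = [(1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
weights = [1.0, math.sqrt(2) / 2, 1.0]

for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    x, y = rational_quadratic_point(t, points, weights)
    print(f"t={t:.2f}  x={x:.6f}  y={y:.6f}  radius={math.hypot(x, y):.6f}")
```

Every sampled point lies on the circle up to floating-point error, so the curve can be evaluated at any resolution without storing more data.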

Use Cases and Features Overview

This section discusses various applications and developments related to AI tools in different domains. Users are exploring the potential of AI in legal practices by utilizing it for drafting legal documents efficiently and enhancing the contract review process with avatars. The use of AI tools to monitor narrative strength and introduce creativity sliders for content generation is also highlighted. Furthermore, the section covers the effectiveness of AI in creating deposition summaries and addresses challenges with data analysis using NotebookLM. Users also shared experiences with accessing NotebookLM, uploading sources, and utilizing audio overview features. Additionally, the Torchtune platform is praised for its performance compared to Unsloth, with new integrations like Kolo and Tune Lab enhancing its capabilities in training and testing models.

Parallel Generation Strategies and Performance Comparisons

This section discusses parallel generation strategies and performance comparisons between models. It covers the efficiency of models like Emu3 and Flux in image generation, the introduction of models like EvaByte for autoregressive image generation, and proposed methods for speeding up autoregressive models. Members also discuss the potential of byte transformers in image modeling and ask about models that use byte transformers for modalities beyond text.

GPU Mode Discussions

- GPU Invalidations Overview: Heavy fences can lead to L1 invalidate ops.

- NVIDIA may not track specific lines for invalidation.

- Microbenchmarking: Insights may require running microbenchmarks.

- AI Compute Efficiency: Focus on making AI more efficient.

- BlockMask Support Inquiry: Member asks about BlockMask support.

- Question on Pickling BlockMask: Member inquires about pickling BlockMask.

- Proposed BlockMask Issue: Better first issue proposed regarding BlockMask.

- Example Code on BlockMask Functionality: Code snippet shared for BlockMask implementation.

- OmniHuman Framework: Generates realistic human videos from a single image.

- FlowLLM for Material Discovery: Combines large language models for material generation.

- Video Generation from Images: OmniHuman can generate high-quality videos from single images.

- Part-Time AI Engineer Position Available: Remote positions for Part-Time AI Engineer.

- Modal Powers High-Performance Computing: Serverless computing platform with GPU Glossary.

- Hiring ML Performance Engineers: Modal is hiring ML performance engineers.

- Torchao and torch.compile Compatibility: Possible incompatibility reported.

- PyTorch Issue Discussion: Discussion on PyTorch issue #141548.

- Community Engagement on GitHub: Encouragement for GitHub commentary.

- AI Exploits Gamebreaking Bug in Trackmania: AI trained to master game technique.

- Release of General-Purpose Robotic Model π0: Code and weights released for general-purpose robotic model.

- Innovative Fax-KI Service Launch: Fax machines transformed into intelligent tools.

- New Updates on Gemini 2.0: Gemini 2.0 now generally available.

- Timeout Implementation in LlamaIndex Models: Discussion on timeout feature in LlamaIndex models.

- Function Calling with Qwen-2.5: Issues with function calling and Qwen-2.5 model.

- Streaming Text in AgentWorkflow: Challenges streaming text down to clients.

- Using OpenAILike for Qwen-2.5: Implementing OpenAILike for function calling.

- Limitations of Tool Call Streaming: Difficulty in streaming tool call outputs.

- MOOC Certificate Delays: Members express frustration over delays in certificates.

- Quiz Availability and Technical Issues: Queries about quiz availability and technical issues.

- Fixed Lecture 1 Video Confirmation: Confirmation of professional captioning on lecture video.

- Seeking Advice for Embed v3 Migration: User seeks advice on migrating to Embed v3 light.

- Desired Moderation Model for Cohere: Desire expressed for moderation model.

- Chat Feature Subscription Inquiry: Inquiry about paid subscription for chat feature.

- Estimating Chat Interaction Costs: User uncertain about the cost of chat interactions.

- Summary: Continuation of diverse discussions and inquiries in the GPU Mode and Cohere channels.

Live Event Announcement and Community Engagement

- A member shared a link to a live event, providing both a Discord event link and a Google Meet link.

- This conveys ongoing community engagement and opportunities for direct interaction.

FAQ

Q: What are Google DeepMind's Gemini 2.0 models, and what features do they offer?

A: Google DeepMind's Gemini 2.0 models like Flash, Flash-Lite, and Pro Experimental offer features such as multimodal input and cost-efficiency.

Q: What topics did Andrej Karpathy cover in his YouTube video on Large Language Models?

A: Andrej Karpathy covered topics like pretraining, fine-tuning, and reinforcement learning in his comprehensive YouTube video on Large Language Models.

Q: What did Jay Alammar and Maarten Grootendorst introduce in their free course on Transformer architecture?

A: Jay Alammar and Maarten Grootendorst introduced a free course that dives deep into the Transformer architecture and mixture-of-experts models.

Q: What was the DeepSeek VL2 Small launch about?

A: DeepSeek introduced a powerful demo for DeepSeek VL2 Small, showcasing capabilities in OCR, text extraction, and chat use-cases.

Q: What clarification did DeepSeek provide regarding their DeepSeek-R1 model?

A: DeepSeek clarified that its code and models are released under the MIT License rather than being fully open-source, sparking discussion of how important source code access is to true open-source projects.
